This repository contains the code examples and supporting material for the Spatial Analytics Workshop held at O’Reilly Where 2.0 on March 30, 2010. Twitter is rolling out enhanced geo features. It will be a while before geotagged tweets are widely adopted, but there is a lot we can do right now using only the location strings in user profiles.

From the Workshop description:

“This workshop will focus on uncovering patterns and generating actionable insights from large datasets using spatial analytics. We will explore combining open government data with other location based information sources like Twitter. Participants will be guided through examples that use Hadoop and Amazon EC2 for scalable processing of location data. We will also cover some basics on spatial statistics, correlations, and trends along with how to visualize and communicate your results with open source tools.”

- Launch an interactive Pig session using Elastic MapReduce and note the EC2 address of the master instance (something like ec2-174-129-153-177.compute-1.amazonaws.com)

- Get the spatialanalytics code from GitHub and use the hcon.sh script on your local machine to open the Hadoop web UI in a browser window (substitute your own instance address and the path to your own EC2 keypair)

        git clone git://github.com/datawrangling/spatialanalytics.git
        cd spatialanalytics/
        ./util/hcon.sh ec2-174-129-153-177.compute-1.amazonaws.com /Users/pskomoroch/id_rsa-gsg-keypair

- ssh into the master Hadoop instance

        skom:spatialanalytics pskomoroch$ ssh hadoop@ec2-174-129-153-177.compute-1.amazonaws.com
        The authenticity of host 'ec2-174-129-153-177.compute-1.amazonaws.com (174.129.153.177)' can't be established.
        RSA key fingerprint is 6d:b1:d6:48:db:37:61:df:b6:04:4a:93:eb:2d:1d:40.
        Are you sure you want to continue connecting (yes/no)? yes
        Warning: Permanently added 'ec2-174-129-153-177.compute-1.amazonaws.com,174.129.153.177' (RSA) to the list of known hosts.
        Linux domU-12-31-39-0F-74-82 2.6.21.7-2.fc8xen #1 SMP Fri Feb 15 12:39:36 EST 2008 i686
        --------------------------------------------------------------------------------
        Welcome to Amazon Elastic MapReduce running Hadoop 0.18.3 and Debian/Lenny.
        Hadoop is installed in /home/hadoop. Log files are in /mnt/var/log/hadoop.
        Check /mnt/var/log/hadoop/steps for diagnosing step failures.
        The Hadoop UI can be accessed via the command: lynx http://localhost:9100/
        --------------------------------------------------------------------------------
        hadoop@domU-12-31-39-0F-74-82:~$

- Install git and fetch the same spatialanalytics code on the Hadoop cluster:

        sudo apt-get -y install git-core
        cd /mnt
        git clone git://github.com/datawrangling/spatialanalytics.git

- Parse Tweets with Hadoop streaming

- streaming/parse_tweets.sh
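The parsing step above can be pictured as a Hadoop streaming mapper that turns each raw JSON tweet into one tab-separated record. The following is only a minimal sketch, not the contents of `streaming/parse_tweets.sh`: the function names `parse_tweet` and `run_mapper`, the choice of output columns, and the decision to drop tweets without a profile location are all assumptions made for illustration.

```python
import json


def parse_tweet(line):
    """Return one tab-separated record for a JSON tweet, or None if unusable."""
    try:
        tweet = json.loads(line)
    except ValueError:
        return None  # skip malformed lines instead of failing the whole job
    if not isinstance(tweet, dict) or "id" not in tweet:
        return None
    user = tweet.get("user") or {}
    if not isinstance(user, dict):
        return None
    location = (user.get("location") or "").strip()
    if not location:
        return None  # assumption: only tweets with a profile location are kept
    fields = [
        user.get("screen_name", ""),
        tweet["id"],
        tweet.get("created_at", ""),
        location,
        tweet.get("text", ""),
    ]
    # Tabs and newlines inside a field would corrupt the record layout.
    return "\t".join(str(f).replace("\t", " ").replace("\n", " ") for f in fields)


def run_mapper(lines):
    """Yield parsed records for every usable input line."""
    for line in lines:
        record = parse_tweet(line)
        if record is not None:
            yield record
```

In a streaming job, a wrapper script would feed `sys.stdin` to `run_mapper` and print each record to standard output.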

- Count global locations in parsed Tweets with Pig

        $ cd /mnt/spatialanalytics/pig
        $ pig -l /mnt locationcounts/global_location_tweets.pig
        $ hadoop dfs -cat /global_location_tweets/part-* | head -30

- Count US based locations in parsed Tweets with Pig

- pig/us_location_counts.pig
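The two Pig counting steps above amount to a group-and-count over the location field. The Pig scripts are not reproduced here; the sketch below shows the same idea in Python for checking a small sample locally. It assumes tab-separated records with the location string in the fourth column (a hypothetical layout) and lowercases locations before counting, which is an illustrative normalization rather than something the scripts are known to do.

```python
from collections import Counter


def count_locations(records, location_index=3):
    """Count records per location string, most frequent first."""
    counts = Counter()
    for record in records:
        fields = record.rstrip("\n").split("\t")
        if len(fields) <= location_index:
            continue  # skip short or malformed records
        location = fields[location_index].strip().lower()
        if location:
            counts[location] += 1
    return counts.most_common()
```

Sorting by frequency makes the long tail visible: a handful of strings account for most records, followed by many variants that appear only once or twice.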

- Visualize long tail of locations

- Load Geonames data into Hadoop & map to Tweet location strings

- location_standardization/parse_geonames.py

- City, state abbreviation mapping

- pig/city_state_exactmatch.pig

- City mapping

- pig/city_exactmatch.pig
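The two exact-match steps above can be thought of as dictionary lookups against the Geonames data: first on a (city, state abbreviation) pair, then on the city name alone. A minimal sketch follows, assuming each place is a dict with `city`, `state`, `fipscode`, and `population` keys (hypothetical field names). Resolving an ambiguous city name to its most populous match is an assumption for illustration, not necessarily what the Pig scripts do.

```python
def build_indexes(places):
    """Build (city, state) and city-only lookup tables from place dicts."""
    city_state = {}
    city_only = {}
    for place in places:
        city = place["city"].strip().lower()
        state = place["state"].strip().lower()
        city_state[(city, state)] = place
        best = city_only.get(city)
        # Assumption: an ambiguous city name maps to its most populous match.
        if best is None or place["population"] > best["population"]:
            city_only[city] = place
    return city_state, city_only


def match_location(location, city_state, city_only):
    """Return the matching place for a profile location string, or None."""
    parts = [part.strip().lower() for part in location.split(",")]
    if len(parts) == 2:
        return city_state.get((parts[0], parts[1]))
    if len(parts) == 1:
        return city_only.get(parts[0])
    return None


# Illustrative rows; the population figures are rough placeholders.
places = [
    {"city": "Portland", "state": "OR", "fipscode": "41051", "population": 580000},
    {"city": "Portland", "state": "ME", "fipscode": "23005", "population": 66000},
]
city_state, city_only = build_indexes(places)
```

Strings that match neither table are the ones left over for the Mechanical Turk step.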

- Expose Geonames data in a Rails search interface

- The code for the Mechanical Turk task and Rails app is here: http://github.com/datawrangling/locations

- Run Pig jobs to generate top locations & time zones for turkers

- pig/locations_timezones.pig

- Publish Mechanical Turk tasks

- Rails app

- Parse Mechanical Turk results for use in Hadoop

- location_standardization/parse_turk_responses.py

- Merge standardized location mappings into lookup table

- Join lookup table with parsed Tweets, emit standardized tweet dataset

- Emit: user_screen_name, tweet_id, tweet_created_at, city, state, fipscode, latitude, longitude, tweet_text, user_id, user_name, user_description, user_profile_image_url, user_url, user_followers_count, user_friends_count, user_statuses_count

- Grep for tweets with “conservative” or “liberal” in user_description

- Aggregate by fipscode with Pig
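The filter and aggregation in the two steps above can be sketched in a few lines. This is an illustration rather than the workshop's code: it assumes the input is a sequence of `(user_id, fipscode, user_description)` tuples, matches whole words case-insensitively, and counts each user once even if they have many tweets (all assumptions).

```python
import re
from collections import defaultdict

LABEL_PATTERN = re.compile(r"\b(conservative|liberal)\b", re.IGNORECASE)


def count_labels_by_county(users):
    """Count self-described conservative and liberal users per FIPS code."""
    seen = set()
    counts = defaultdict(lambda: {"conservative": 0, "liberal": 0})
    for user_id, fipscode, description in users:
        if user_id in seen:
            continue  # assumption: one vote per user, not per tweet
        seen.add(user_id)
        labels = {m.lower() for m in LABEL_PATTERN.findall(description or "")}
        for label in labels:
            counts[fipscode][label] += 1
    return dict(counts)
```

A description that mentions both words is counted under both labels, so this crude keyword match overstates certainty and is best read as a rough signal.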

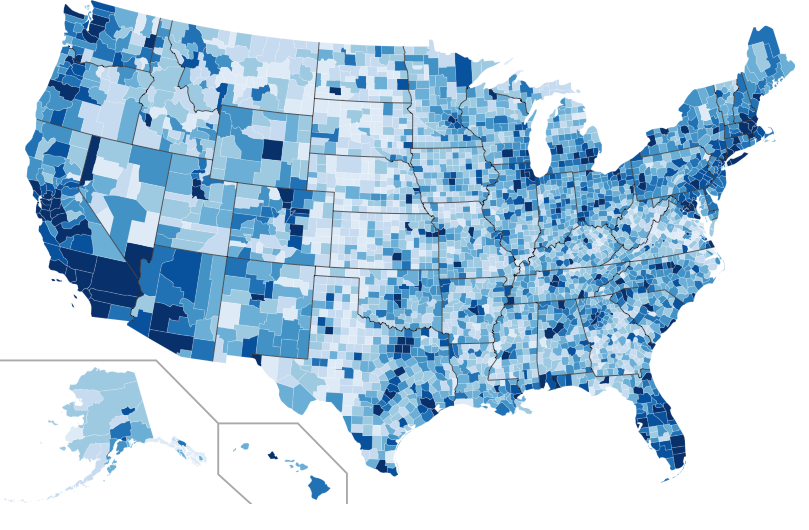

- Generate county level SVG heatmaps and compare to 2008 presidential election

- Use Pig to tokenize tweet text

- Aggregate counts at hourly & county level
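The tokenize-and-aggregate steps above can be sketched as follows. The workshop does this in Pig; the Python version here is only meant to show the shape of the computation. It assumes input tuples of `(fipscode, tweet_created_at, tweet_text)`, timestamps in the classic Twitter format (for example `Wed Feb 10 14:05:09 +0000 2010`), a simple regular-expression tokenizer, and counting each token at most once per tweet. All of these are assumptions.

```python
import re
from collections import Counter
from datetime import datetime

TOKEN_PATTERN = re.compile(r"[#@]?\w+")
CREATED_AT_FORMAT = "%a %b %d %H:%M:%S +0000 %Y"


def hourly_county_counts(tweets):
    """Count tweets containing each token, keyed by (fipscode, hour, token)."""
    counts = Counter()
    for fipscode, created_at, text in tweets:
        timestamp = datetime.strptime(created_at, CREATED_AT_FORMAT)
        hour = timestamp.strftime("%Y-%m-%d %H")
        # A set keeps a repeated word from inflating a single tweet's weight.
        for token in set(TOKEN_PATTERN.findall(text.lower())):
            counts[(fipscode, hour, token)] += 1
    return counts
```

The resulting keys are the natural input for a time-resolved heatmap: fix a token, then read off one value per county per hour.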

- Generate time-resolved heatmaps for the top 100 trends over 10 days in February

- Explore data with quick Rails app

- Find phrases highly associated with each county
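The outline does not say which association measure is used for the step above. One simple option, shown here purely as an assumption, is to compare how often a phrase occurs within a county against how often it occurs overall, and rank phrases by the log of that ratio. The input layout `{(fipscode, phrase): count}` and the `min_count` cutoff are hypothetical.

```python
import math
from collections import Counter, defaultdict


def county_phrase_scores(counts, min_count=2):
    """Rank phrases per county by log(local rate / overall rate)."""
    county_totals = Counter()
    phrase_totals = Counter()
    for (fipscode, phrase), n in counts.items():
        county_totals[fipscode] += n
        phrase_totals[phrase] += n
    grand_total = sum(county_totals.values())

    scores = defaultdict(list)
    for (fipscode, phrase), n in counts.items():
        if n < min_count:
            continue  # very rare phrases give unstable ratios
        local_rate = n / county_totals[fipscode]
        overall_rate = phrase_totals[phrase] / grand_total
        scores[fipscode].append((phrase, math.log(local_rate / overall_rate)))
    for fipscode in scores:
        scores[fipscode].sort(key=lambda item: item[1], reverse=True)
    return dict(scores)
```

A score near zero means the phrase is about as common in the county as everywhere else; large positive scores mark phrases that are distinctive for that county.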

- Use per-capita counts of conservative and liberal users by county, together with tweet text, to classify tweets

- Load Data.gov datasets on Unemployment, Income, Voting record

- Correlate Tweet phrases to those quantities using location
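One straightforward reading of the correlation step above is a Pearson correlation, computed across counties, between how often a phrase is used and a Data.gov quantity such as the unemployment rate. The sketch below assumes both inputs are dicts keyed by FIPS code (a hypothetical layout) and only uses counties present in both; the workshop may well use a different statistic.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return float("nan")  # correlation is undefined for a constant series
    return cov / math.sqrt(var_x * var_y)


def correlate_by_county(phrase_rate, indicator):
    """Correlate two per-county dicts over the FIPS codes they share."""
    shared = sorted(set(phrase_rate) & set(indicator))
    return pearson([phrase_rate[f] for f in shared],
                   [indicator[f] for f in shared])
```

Counties with few tweets produce noisy phrase rates, so it is worth filtering on a minimum tweet count before reading much into the coefficient.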

- Load reverse geocode data into distributed cache, use spatial index to assign lat/lon coordinates to counties or congressional districts using Hadoop
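The reverse geocoding step above needs a fast way to find which region contains a given point. A minimal sketch of one approach, assuming regions are simple polygons given as lists of `(lon, lat)` vertices: a uniform grid over bounding boxes narrows the candidates, and a ray-casting test confirms containment. The `GridIndex` class, its `cell_size` parameter, and the polygon format are hypothetical; in a Hadoop job the index would be built once per task from the file shipped through the distributed cache.

```python
import math
from collections import defaultdict


def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: True if the point lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > lat) != (y2 > lat):  # edge crosses the horizontal ray
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


class GridIndex:
    """Uniform grid mapping cells to the regions whose bounding box overlaps them."""

    def __init__(self, regions, cell_size=1.0):
        self.cell_size = cell_size
        self.cells = defaultdict(list)
        for region_id, polygon in regions.items():
            lons = [p[0] for p in polygon]
            lats = [p[1] for p in polygon]
            for cx in range(self._cell(min(lons)), self._cell(max(lons)) + 1):
                for cy in range(self._cell(min(lats)), self._cell(max(lats)) + 1):
                    self.cells[(cx, cy)].append((region_id, polygon))

    def _cell(self, value):
        return math.floor(value / self.cell_size)

    def lookup(self, lon, lat):
        """Return the id of the first region containing the point, or None."""
        key = (self._cell(lon), self._cell(lat))
        for region_id, polygon in self.cells.get(key, []):
            if point_in_polygon(lon, lat, polygon):
                return region_id
        return None


# Two toy square "counties" for illustration only.
regions = {
    "west": [(0, 0), (2, 0), (2, 2), (0, 2)],
    "east": [(2, 0), (4, 0), (4, 2), (2, 2)],
}
index = GridIndex(regions)
```

Real county or congressional district boundaries have holes and multiple parts, which this sketch does not handle.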

- Use Tweet phrases associated with high-crime areas to predict the real-time level of criminal activity from Tweets